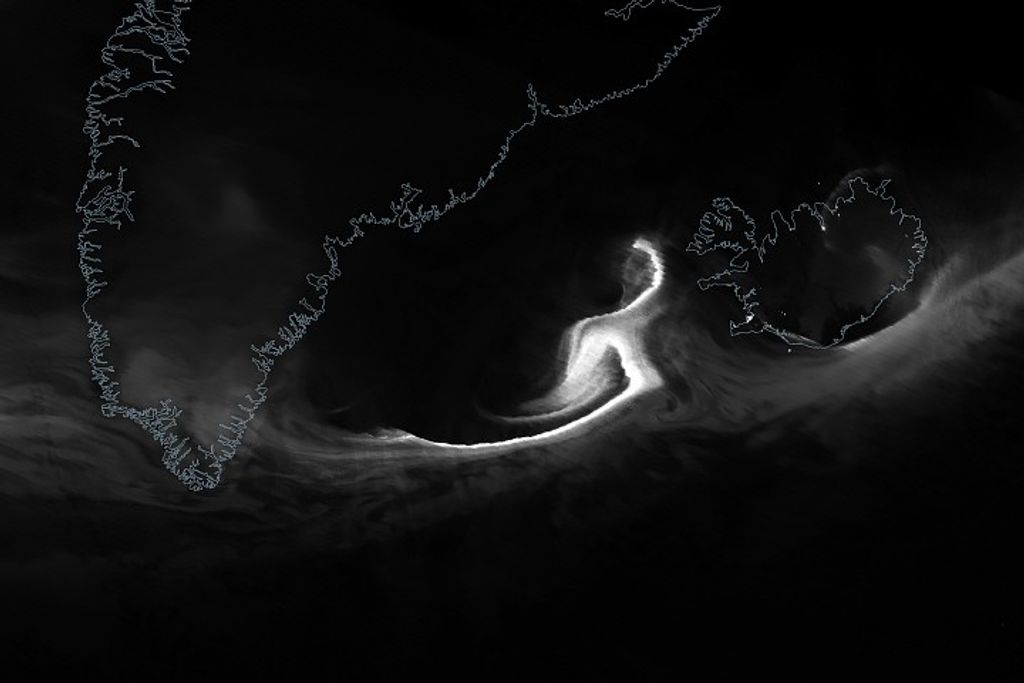

Oceans cover more than 70 percent of Earth’s surface and profoundly influence our planet’s atmosphere, weather, and climate. However, uncovering the many secrets hidden beneath the waves presents unique challenges for researchers and requires specialized technology to observe what humans can’t see.

NASA technologists are developing sensors that can improve measurements of Earth’s oceans, creating new instruments to study aspects of our home planet that we have never before been able to examine.

Imaging what lies below the ocean surface requires the development of a new instrument capable of improving the information available to scientists. Ved Chirayath, a scientist at NASA’s Ames Research Center, says, “Images of objects under the surface are distorted in several ways, making it difficult to gather reliable data about them.”

Chirayath has a technology solution. It’s called fluid lensing.

He explains, “Refraction of light by waves distorts the appearance of undersea objects in a number of ways. When a wave [crest] passes over, the objects seem bigger due to the magnifying effect of the wave. When the trough passes over, the objects look smaller. Fluid lensing is the first technique to correct for these effects.”

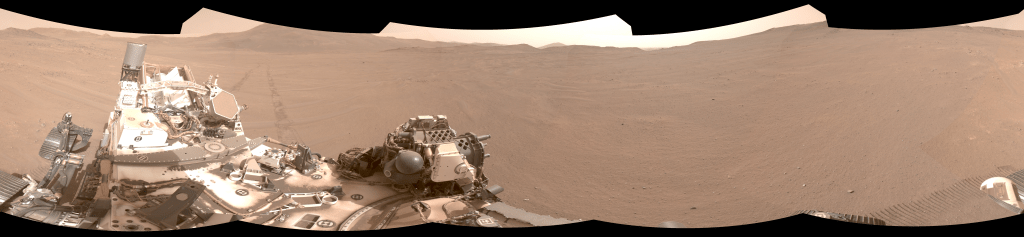

Without correcting for refraction, it’s impossible to determine the exact size or extent of objects under the water’s surface, how they’re changing over time, or even precisely where they are. Chirayath developed a special camera called FluidCam that uses fluid lensing to see beneath the waves. Flown aboard an unmanned aerial vehicle (UAV), it captures terabytes’ worth of 3D imagery at half-centimeter resolution.

The key to fluid lensing lies in the unique software Chirayath developed to analyze the imagery collected by FluidCam.

As he puts it, “This software turns what would otherwise be a big problem into an advantage: not only eliminating distortions caused by waves, but using their magnification to improve image resolution.”

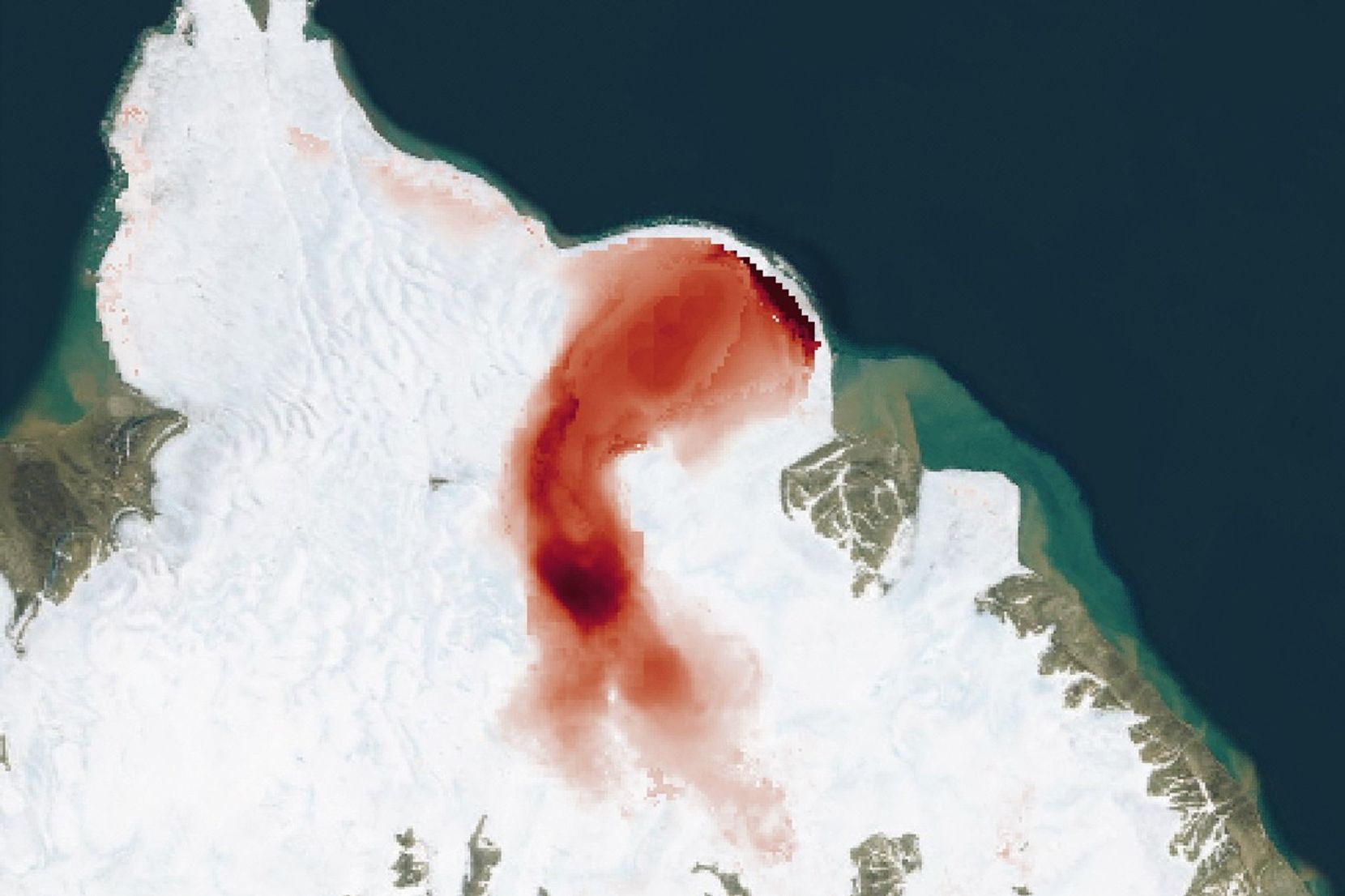

He is focusing FluidCam on coral reefs, whose health has been significantly degraded by pollution, overharvesting, rising ocean temperatures, and acidification, among other stressors.

To understand how reefs are affected by environmental and human pressures, and to work with resource managers to identify how to sustain reef ecosystems, researchers need to determine how much healthy reef area exists today. By augmenting datasets from multiple NASA satellites and airborne instruments, fluid lensing could help researchers establish a high-resolution baseline of reef area worldwide. This effort will help identify the effects of environmental change on these intricate, life-filled ecosystems.

Chirayath and his team designed special software to teach supercomputers how different conditions, such as waves of different sizes, affect the captured images. The computers combine multiple airborne and satellite datasets and identify objects in the images accordingly, distinguishing between what is and is not coral and mapping it with 95% greater accuracy than previous efforts.

He says, “We created an observation and training network called NeMO-Net through which scientists and members of the public can analyze imagery captured by FluidCam and other instruments to help classify and map coral in 3D. This is the database we use to train our supercomputer to perform global classifications.”

Chirayath is working toward a space-based FluidCam. From orbit the camera could map coral reef ecosystems globally and help researchers better understand the overall health of coral reefs.

To learn more about the amazing technologies NASA uses to explore our planet, visit science.nasa.gov.